A new buzzword is making waves in the tech world, and it goes by several names: large language model optimization (LLMO), generative engine optimization (GEO) or generative AI optimization (GAIO).

At its core, GEO is about optimizing how generative AI applications present your products, brands, or website content in their results. For simplicity, I’ll refer to this concept as GEO throughout this article.

I’ve previously explored whether it’s possible to shape the outputs of generative AI systems. That discussion was my initial foray into the topic of GEO.

Since then, the landscape has evolved rapidly, with new generative AI applications capturing significant attention. It’s time to delve deeper into this fascinating area.

Platforms like ChatGPT, Google AI Overviews, Microsoft Copilot and Perplexity are revolutionizing how users search and consume information and transforming how businesses and brands can gain visibility in AI-generated content.

A quick disclaimer: no proven methods exist yet in this field.

It’s still too new, reminiscent of the early days of SEO when search engine ranking factors were unknown and progress relied on testing, research and a deep technological understanding of information retrieval and search engines.

Understanding the landscape of generative AI

Understanding how natural language processing (NLP) and large language models (LLMs) function is critical in this early stage.

A solid grasp of these technologies is essential for identifying future potential in SEO, digital brand building and content strategies.

The approaches outlined here are based on my research of scientific literature, generative AI patents and over a decade of experience working with semantic search.

How large language models work

Core functionality of LLMs

Before engaging with GEO, it’s essential to have a basic understanding of the technology behind LLMs.

Much like search engines, understanding the underlying mechanisms helps avoid chasing ineffective hacks or false recommendations.

Investing a few hours to grasp these concepts can save resources by steering clear of unnecessary measures.

What makes LLMs revolutionary

LLMs, such as GPT models, Claude or LLaMA, represent a transformative leap in search technology and generative AI.

They change how search engines and AI assistants process and respond to queries by moving beyond simple text matching to deliver nuanced, contextually rich answers.

LLMs demonstrate remarkable capabilities in language comprehension and reasoning that go beyond simple text matching to provide more nuanced and contextual responses, per research like Microsoft’s “Large Search Model: Redefining Search Stack in the Era of LLMs.”

Core functionality in search

The core functionality of LLMs in search is to process queries and produce natural language summaries.

Instead of just extracting information from existing documents, these models can generate comprehensive answers while maintaining accuracy and relevance.

This is achieved through a unified framework that treats all (search-related) tasks as text generation problems.

What makes this approach particularly powerful is its ability to customize answers through natural language prompts. The system first generates an initial set of query results, which the LLM refines and improves.

If additional information is needed, the LLM can generate supplementary queries to collect more comprehensive data.

The underlying processes of encoding and decoding are key to their functionality.

The encoding process

Encoding involves processing and structuring training data into tokens, which are fundamental units used by language models.

Tokens can represent words, n-grams, entities, images, videos or entire documents, depending on the application.

It’s important to note, however, that LLMs do not “understand” in the human sense – they process data statistically rather than comprehending it.

Transforming tokens into vectors

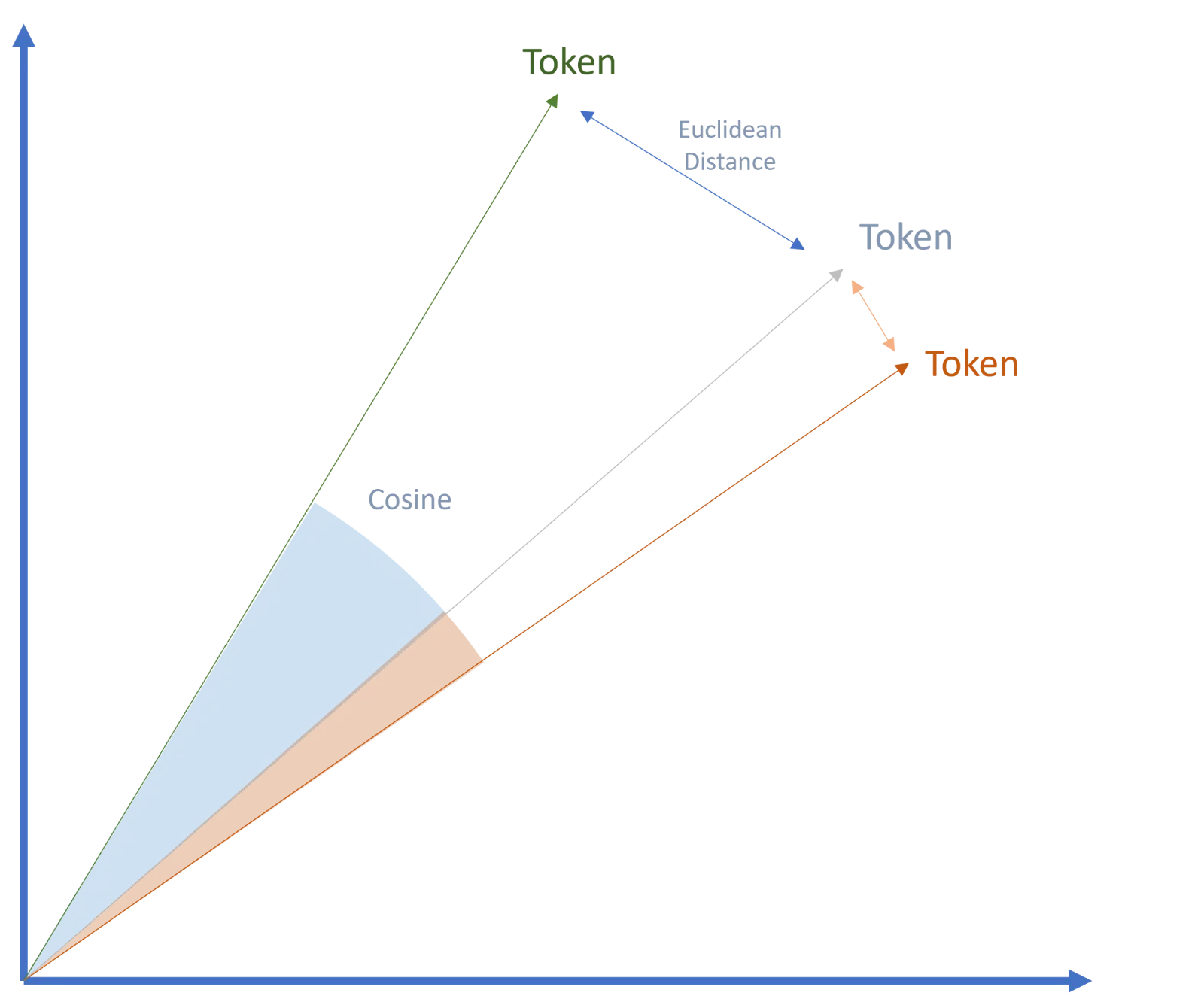

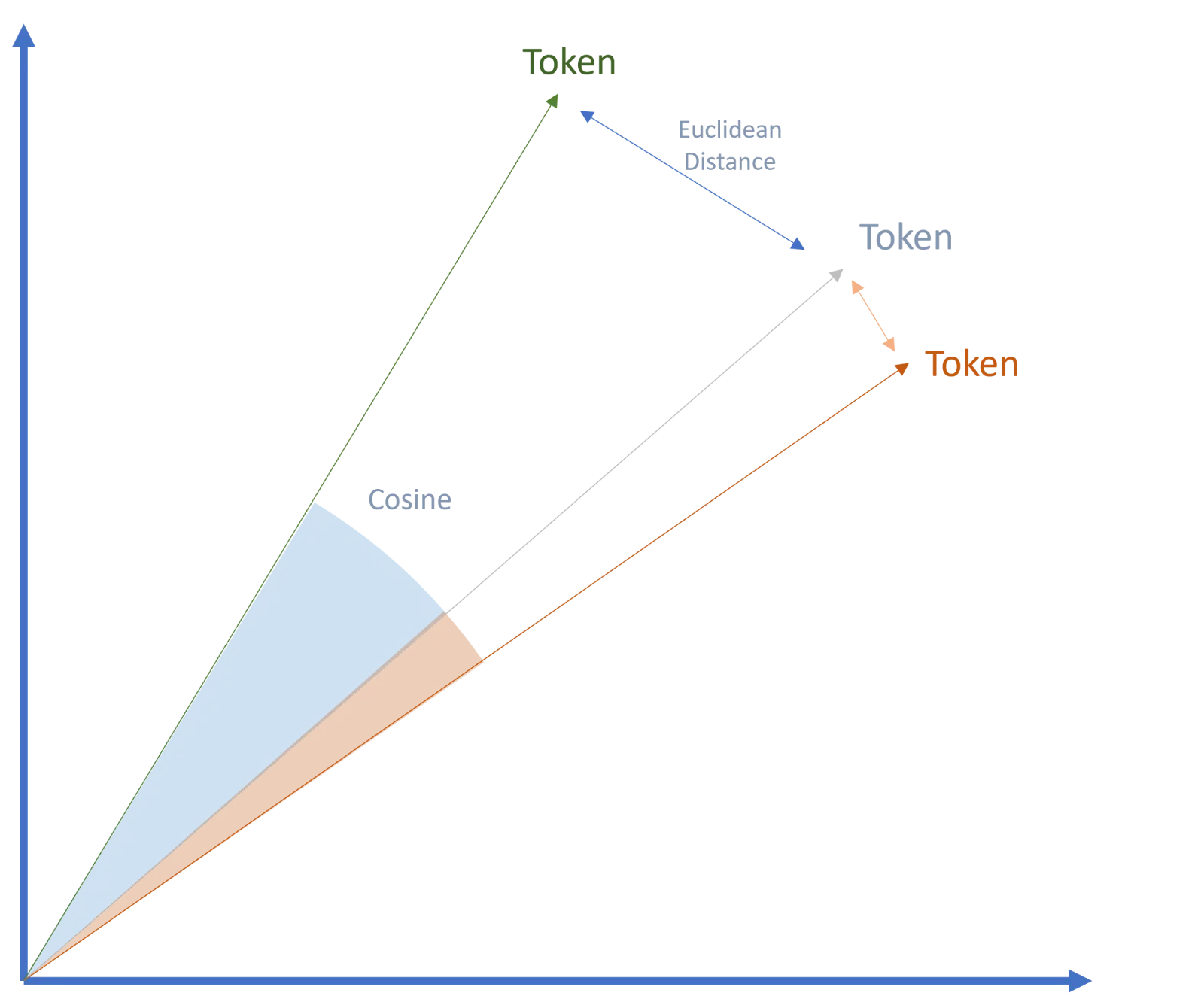

In the next step, tokens are transformed into vectors, forming the foundation of Google’s transformer technology and transformer-based language models.

This breakthrough was a game changer in AI and is a key factor in the widespread adoption of AI models today.

Vectors are numerical representations of tokens, with the numbers capturing specific attributes that describe the properties of each token.

These properties allow vectors to be classified within semantic spaces and related to other vectors, a process known as embeddings.

The semantic similarity and relationships between vectors can then be measured using methods like cosine similarity or Euclidean distance.

The decoding process

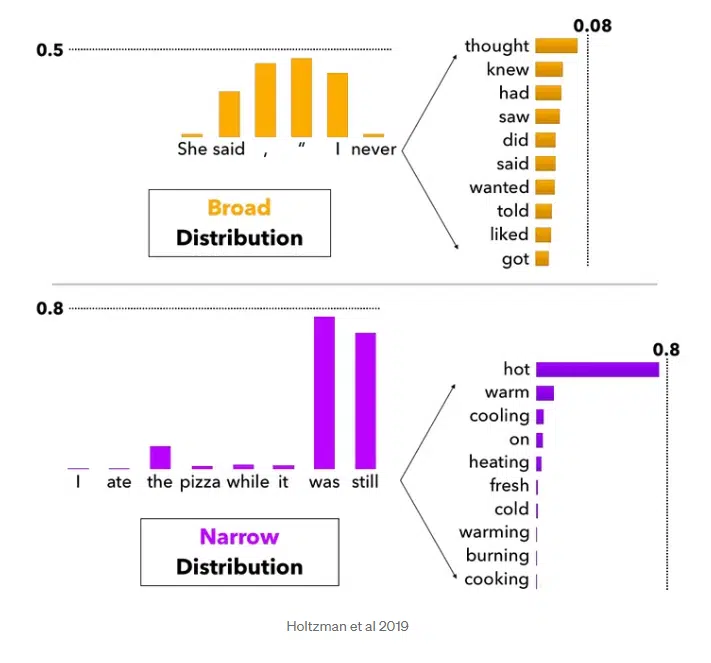

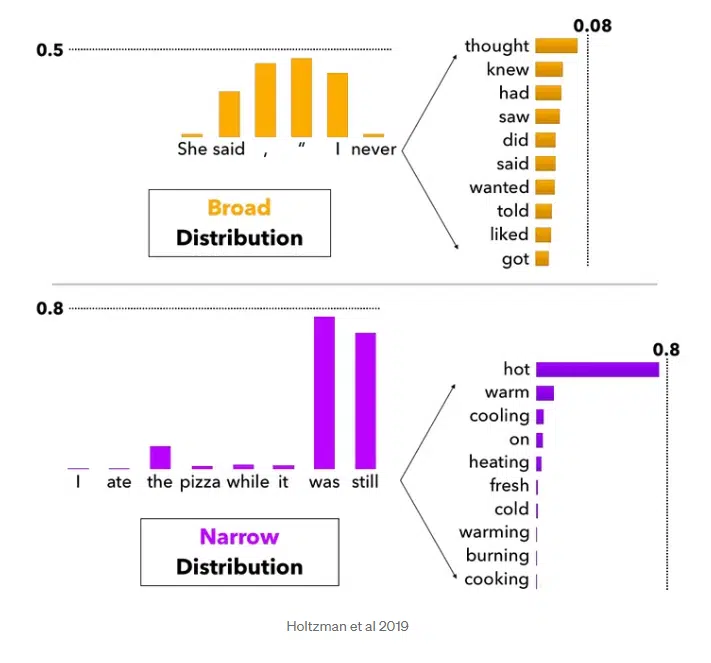

Decoding is about interpreting the probabilities that the model calculates for each possible next token (word or symbol).

The goal is to create the most sensible or natural sequence. Different methods, such as top K sampling or top P sampling, can be used when decoding.

Potentially, subsequent words are evaluated with a probability score. Depending on how high the “creativity scope” of the model is, the top K words are considered as possible next words.

In models with a broader interpretation, the following words can also be taken into account in addition to the Top 1 probability and thus be more creative in the output.

This also explains possible different results for the same prompt. With models that are “strictly” designed, you will always get similar results.

Beyond text: The multimedia capabilities of generative AI

The encoding and decoding processes in generative AI rely on natural language processing.

By using NLP, the context window can be expanded to account for grammatical sentence structure, enabling the identification of main and secondary entities during natural language understanding.

Generative AI extends beyond text to include multimedia formats like audio and, occasionally, visuals.

However, these formats are typically transformed into text tokens during the encoding process for further processing. (This discussion focuses on text-based generative AI, which is the most relevant for GEO applications.)

Dig deeper: How to win with generative engine optimization while keeping SEO top-tier

Challenges and advancements in generative AI

Major challenges for generative AI include ensuring information remains up-to-date, avoiding hallucinations, and delivering detailed insights on specific topics.

Basic LLMs are often trained on superficial information, which can lead to generic or inaccurate responses to specific queries.

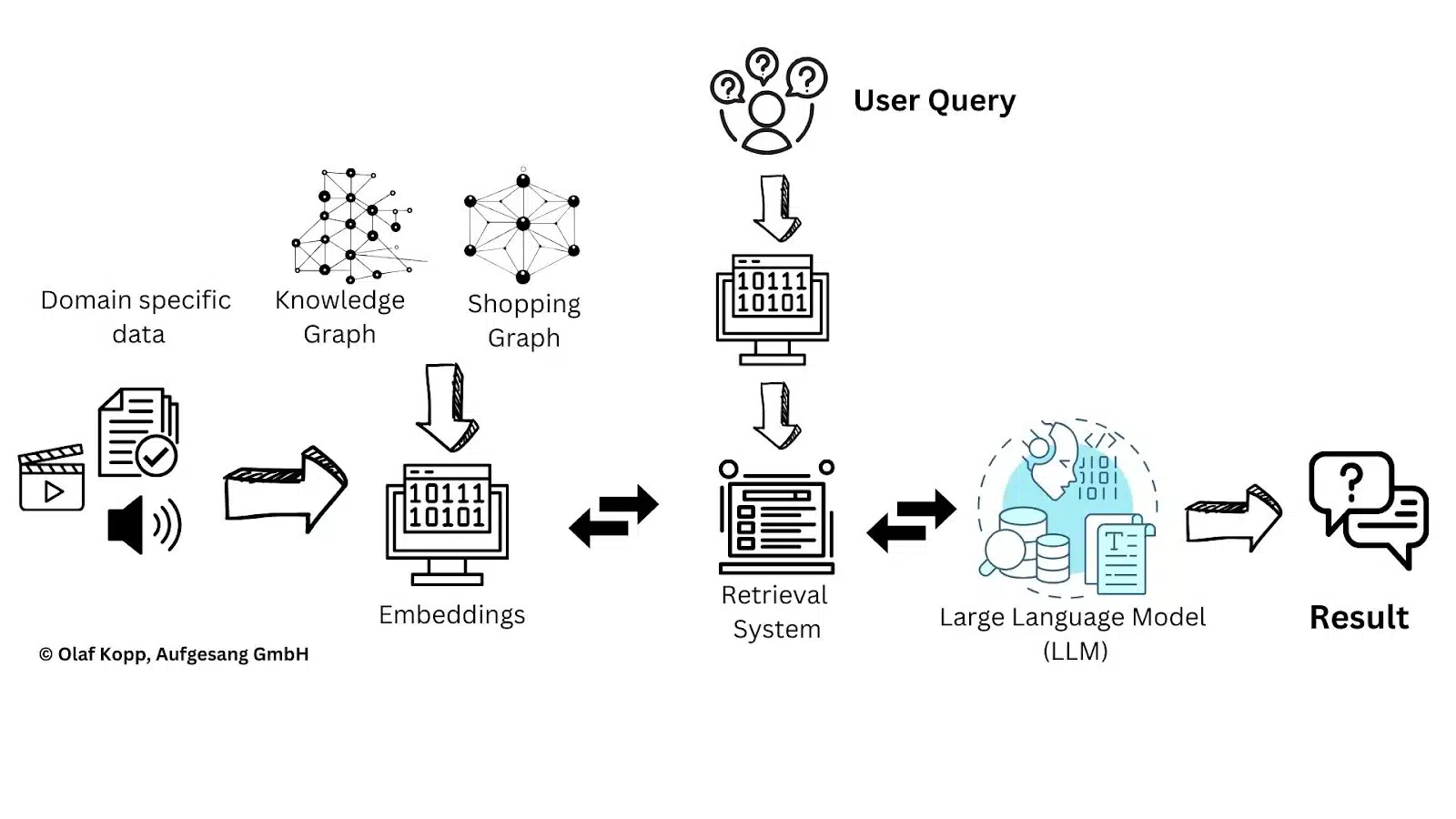

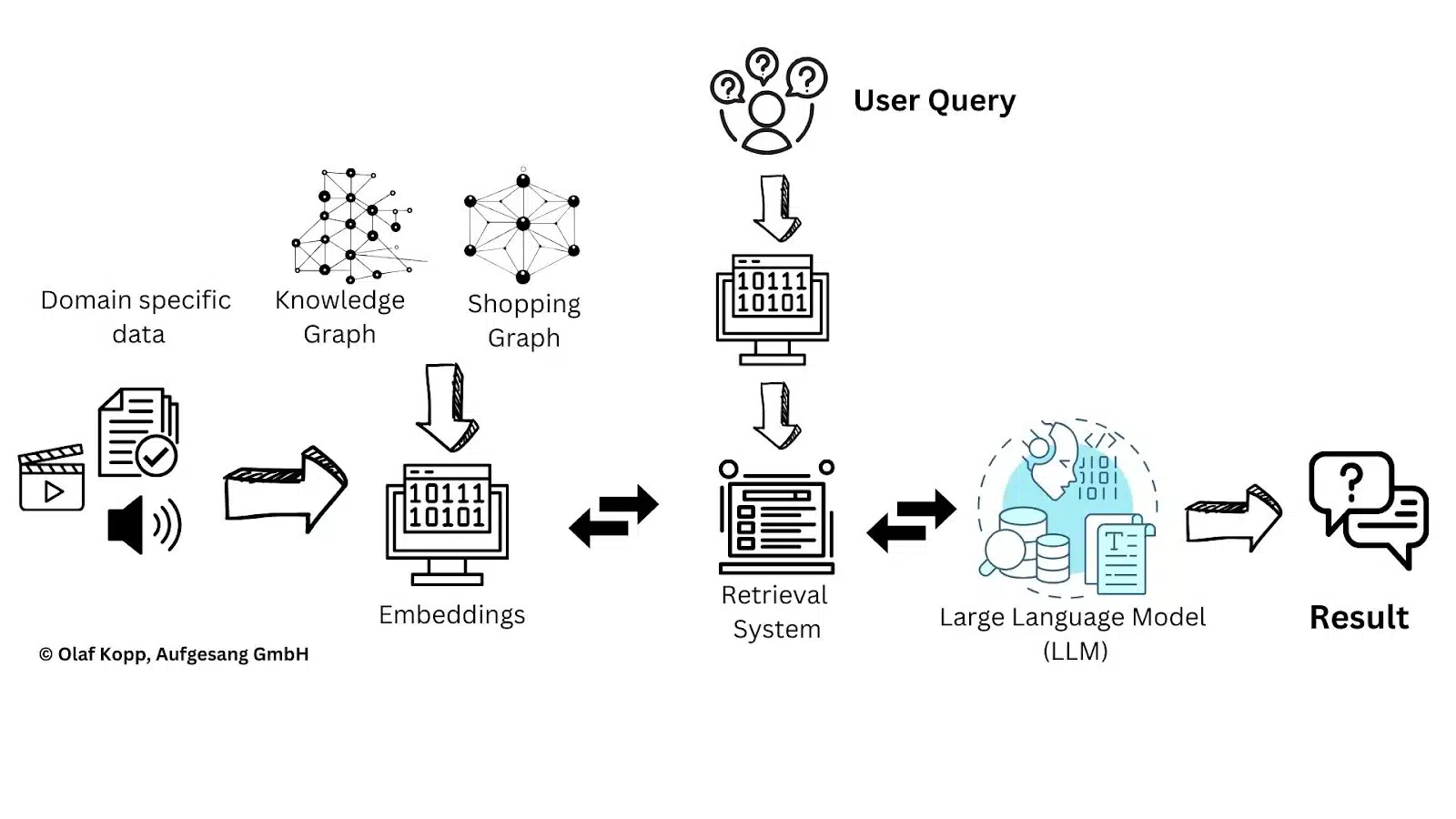

To address this, retrieval-augmented generation has become a widely used method.

Retrieval-augmented generation: A solution to information challenges

RAG supplies LLMs with additional topic-specific data, helping them overcome these challenges more effectively.

In addition to documents, topic-specific information can also be integrated using knowledge graphs or entity nodes transformed into vectors.

This enables the inclusion of ontological information about relationships between entities, moving closer to true semantic understanding.

RAG offers potential starting points for GEO. While determining or influencing the sources in the initial training data can be challenging, GEO allows for a more targeted focus on preferred topic-specific sources.

The key question is how different platforms select these sources, which depends on whether their applications have access to a retrieval system capable of evaluating and selecting sources based on relevance and quality.

The critical role of retrieval models

Retrieval models play a crucial role in the RAG architecture by acting as information gatekeepers.

They search through large datasets to identify relevant information for text generation, functioning like specialized librarians who know exactly which “books” to retrieve on a given topic.

These models use algorithms to evaluate and select the most pertinent data, enabling the integration of external knowledge into text generation. This enhances context-rich language output and expands the capabilities of traditional language models.

Retrieval systems can be implemented through various mechanisms, including:

- Vector embeddings and vector search.

- Document index databases using techniques like BM25 and TF-IDF.

Retrieval approaches of major AI platforms

Not all systems have access to such retrieval systems, which presents challenges for RAG.

This limitation may explain why Meta is now working on its own search engine, which would allow it to leverage RAG within its LLaMA models using a proprietary retrieval system.

Perplexity claims to use its own index and ranking systems, though there are accusations that it scrapes or copies search results from other engines like Google.

Claude’s approach remains unclear regarding whether it uses RAG alongside its own index and user-provided information.

Gemini, Copilot and ChatGPT differ slightly. Microsoft and Google leverage their own search engines for RAG or domain-specific training.

ChatGPT has historically used Bing search, but with the introduction of SearchGPT, it’s uncertain if OpenAI operates its own retrieval system.

OpenAI has stated that SearchGPT employs a mix of search engine technologies, including Microsoft Bing.

“The search model is a fine-tuned version of GPT-4o, post-trained using novel synthetic data generation techniques, including distilling outputs from OpenAI o1-preview. ChatGPT search leverages third-party search providers, as well as content provided directly by our partners, to provide the information users are looking for.”

Microsoft is one of ChatGPT’s partners.

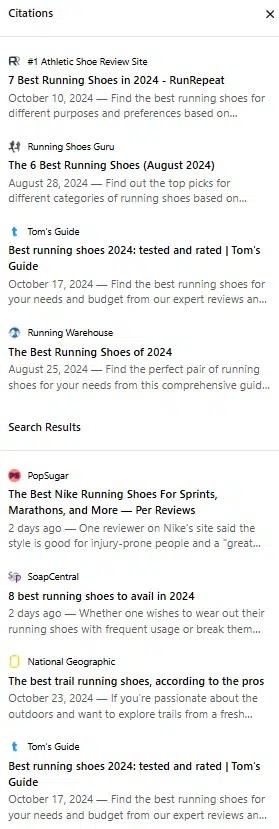

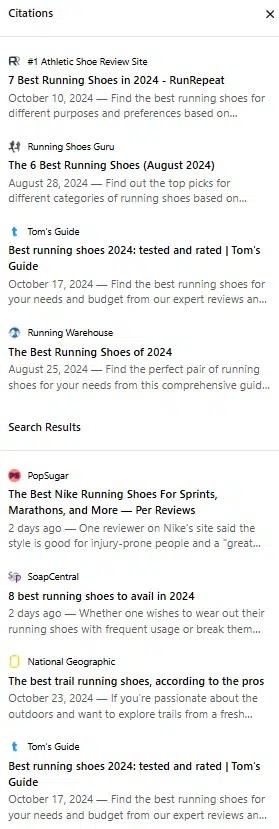

When ChatGPT is asked about the best running shoes, there is some overlap between the top-ranking pages in Bing search results and the sources used in its answers, though the overlap is significantly less than 100%.

Evaluating the retrieval-augmented generation process

Other factors may influence the evaluation of the RAG pipeline.

- Faithfulness: Measures the factual consistency of generated answers against the given context.

- Answer relevancy: Evaluates how pertinent the generated answer is to the given prompt.

- Context precision: Assesses whether relevant items in the contexts are ranked appropriately, with scores from 0-1.

- Aspect critique:Evaluates submissions based on predefined aspects like harmlessness and correctness, with ability to define custom evaluation criteria.

- Groundedness: Measures how well answers align with and can be verified against source information, ensuring claims are substantiated by the context.

- Source references: Having citations and links to original sources allows verification and helps identify retrieval issues.

- Distribution and coverage: Ensuring balanced representation across different source documents and sections through controlled sampling.

- Correctness/Factual accuracy: Whether generated content contains accurate facts.

- Mean average precision (MAP): Evaluates the overall precision of retrieval across multiple queries, considering both precision and document ranking. It calculates the mean of average precision scores for each query, where precision is computed at each position in the ranked results. A higher MAP indicates better retrieval performance, with relevant documents appearing higher in search results.

- Mean reciprocal rank (MRR): Measures how quickly the first relevant document appears in search results. It’s calculated by taking the reciprocal of the rank position of the first relevant document for each query, then averaging these values across all queries. For example, if the first relevant document appears at position 4, the reciprocal rank would be 1/4. MRR is particularly useful when the position of the first correct result matters most.

- Stand-alone quality: Evaluates how context-independent and self-contained the content is, scored 1-5 where 5 means the content makes complete sense by itself without requiring additional context.

Prompt vs. query

A prompt is more complex and aligned with natural language than typical search queries, which are often just a series of key terms.

Prompts are typically framed with explicit questions or coherent sentences, providing greater context and enabling more precise answers.

It is important to distinguish between optimizing for AI Overviews and AI assistant results.

- AI Overviews, a Google SERP feature, are generally triggered by search queries.

- Whereas AI assistants rely on more complex natural language prompts.

To bridge this gap, the RAG process must convert the prompt into a search query in the background, preserving critical context to effectively identify suitable sources.

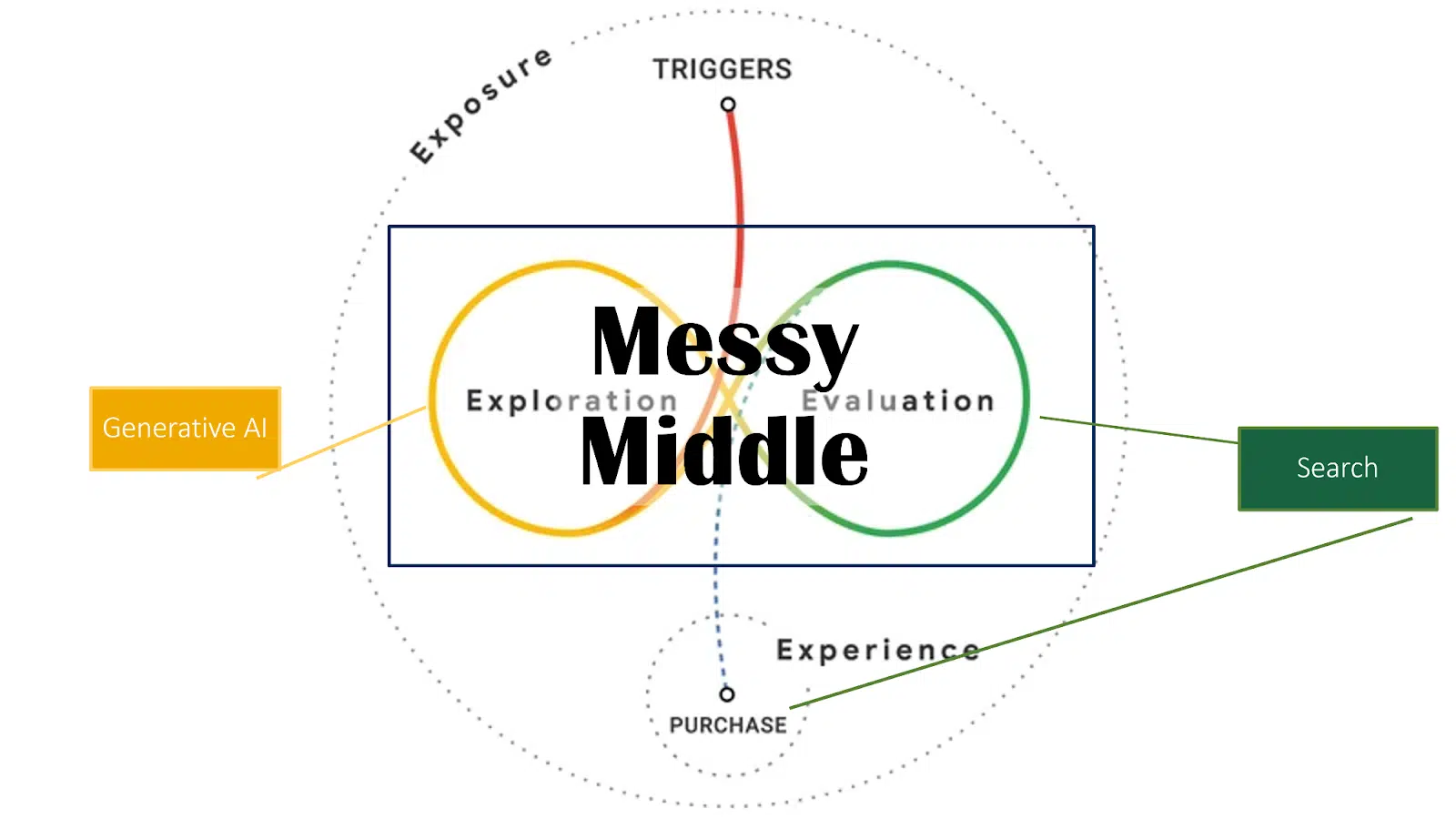

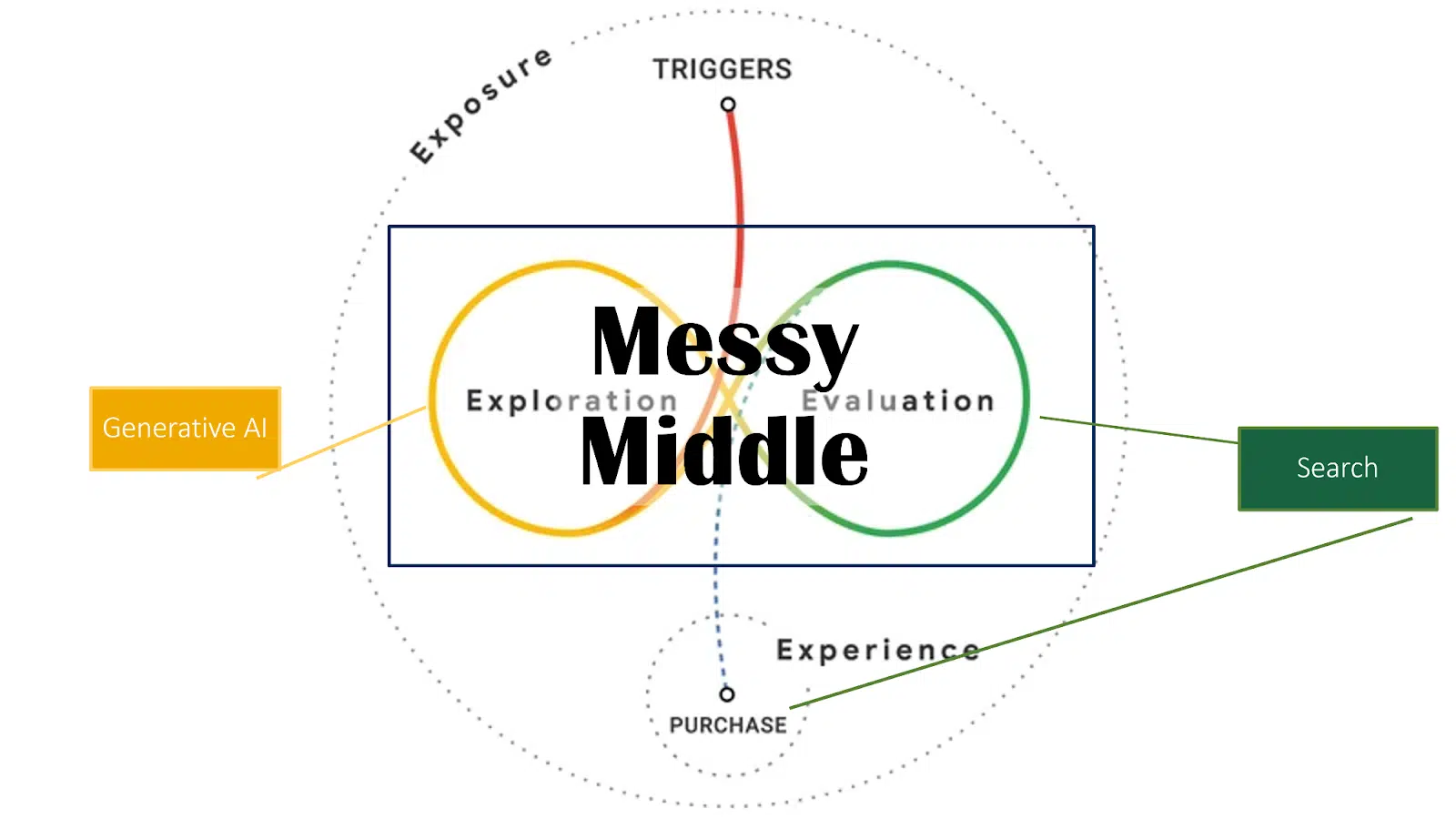

Goals and strategies of GEO

The goals of GEO are not always clearly defined in discussions.

Some focus on having their own content cited in referenced source links, while others aim to have their name, brand or products mentioned directly in the output of generative AI.

Both goals are valid but require different strategies.

- Being cited in source links involves ensuring your content is referenced.

- Whereas mentions in AI output rely on increasing the likelihood of your entity – whether a person, organization or product – being included in relevant contexts.

A foundational step for both objectives is to establish a presence among preferred or frequently selected sources, as this is a prerequisite for achieving either goal.

Do we need to focus on all LLMs?

The varying results of AI applications demonstrate that each platform uses its own processes and criteria for recommending named entities and selecting sources.

In the future, it will likely be necessary to work with multiple large language models or AI assistants and understand their unique functionalities. For SEOs accustomed to Google’s dominance, this will require an adjustment.

Over the coming years, it will be essential to monitor which applications gain traction in specific markets and industries and to understand how each selects its sources.

Why are certain people, brands or products cited by generative AI?

In the coming years, more people will rely on AI applications to search for products and services.

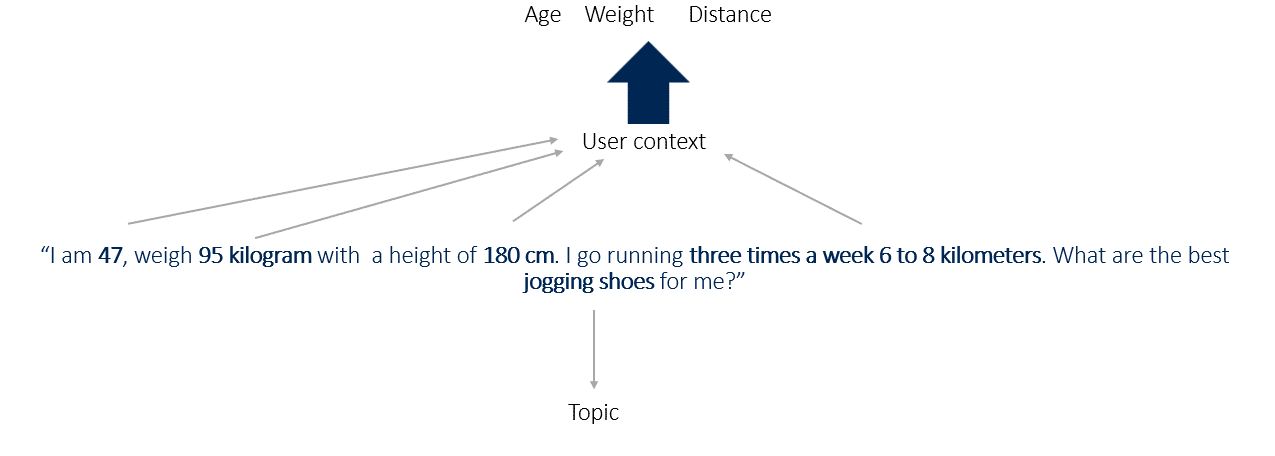

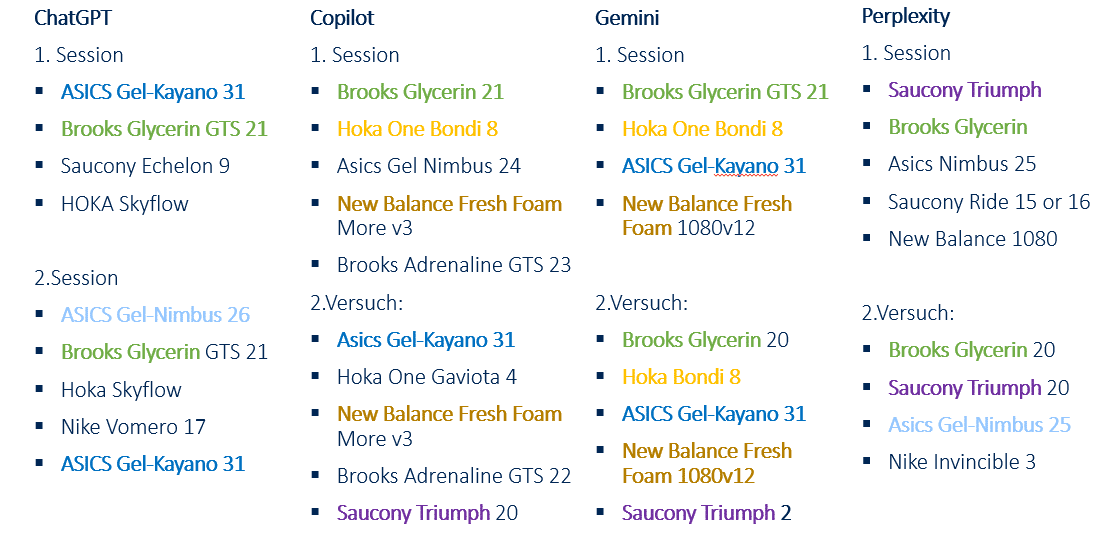

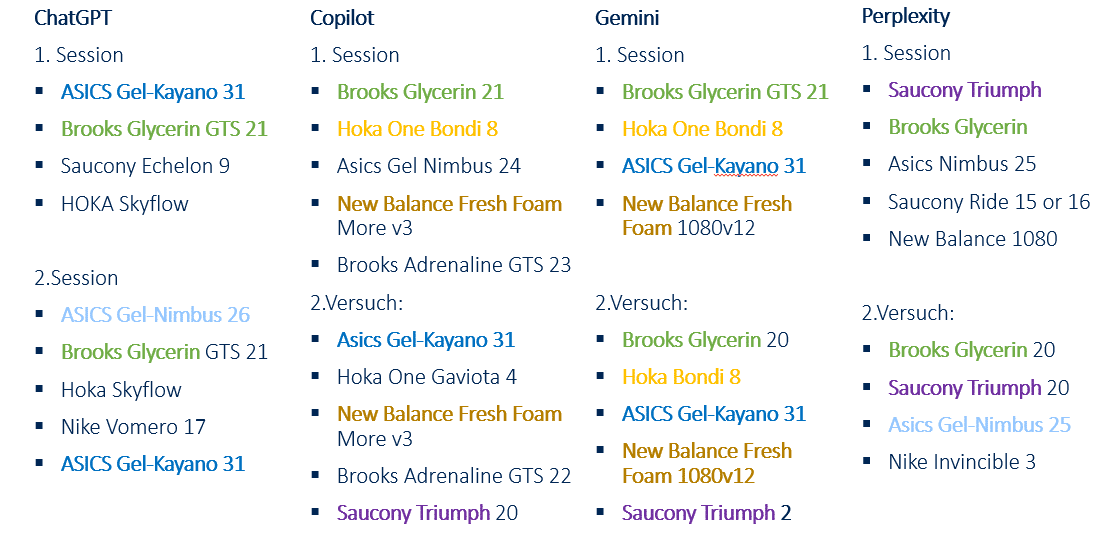

For example, a prompt like:

- “I am 47, weigh 95 kilograms, and am 180 cm tall. I go running three times a week, 6 to 8 kilometers. What are the best jogging shoes for me?”

This prompt provides key contextual information, including age, weight, height and distance as attributes, with jogging shoes as the main entity.

Products frequently associated with such contexts have a higher likelihood of being mentioned by generative AI.

Testing platforms like Gemini, Copilot, ChatGPT and Perplexity can reveal which contexts these systems consider.

Based on the headings of the cited sources, all four systems appear to have deduced from the attributes that I am overweight, generating information from posts with headings like:

- Best Running Shoes for Heavy Runners (August 2024)

- 7 Best Running Shoes For Heavy Men in 2024

- Best Running Shoes for Heavy Men in 2024

- Best running shoes for heavy female runners

- 7 Best Long Distance Running Shoes in 2024

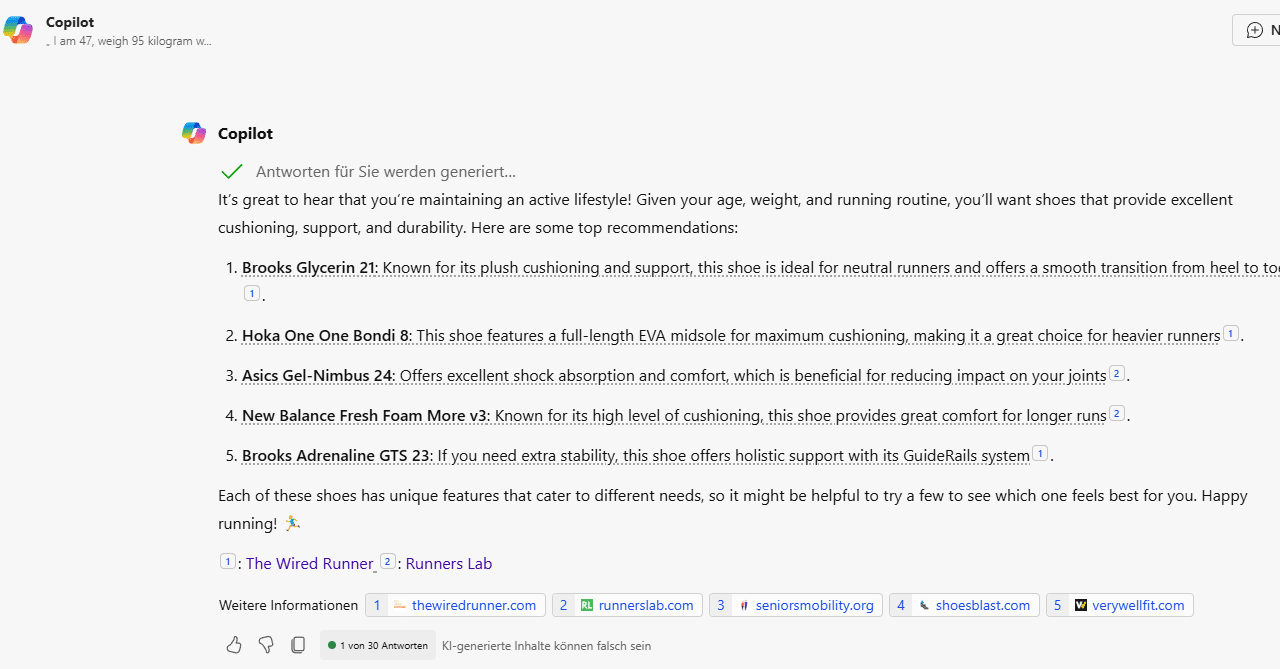

Copilot

Copilot considers attributes such as age and weight.

Based on the referenced sources, it identifies an overweight context from this information.

All cited sources are informational content, such as tests, reviews and listicles, rather than ecommerce category or product detail pages.

ChatGPT

ChatGPT takes attributes like distance and weight into account. From the referenced sources, it derives an overweight and long-distance context.

All cited sources are informational content, such as tests, reviews and listicles, rather than typical shop pages like category or product detail pages.

Perplexity

Perplexity considers the weight attribute and derives an overweight context from the referenced sources.

The sources include informational content, such as tests, reviews, listicles and typical shop pages.

Gemini

Gemini does not directly provide sources in the output. However, further investigation reveals that it also processes the contexts of age and weight.

Each major LLM lists different products, with only one shoe consistently recommended by all four tested AI systems.

All the systems exhibit a degree of creativity, suggesting varying products across different sessions.

Notably, Copilot, Perplexity and ChatGPT primarily reference non-commercial sources, such as shop websites or product detail pages, aligning with the prompt’s purpose.

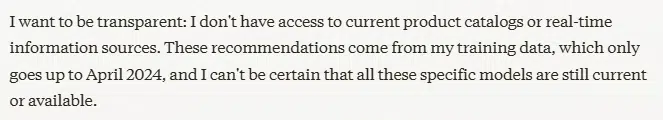

Claude was not tested further. While it also suggests shoe models, its recommendations are based solely on initial training data without access to real-time data or its own retrieval system.

As you can see from the different results, each LLM will have its own process of selecting sources and content, making the GEO challenge even greater.

The recommendations are influenced by co-occurrences, co-mentions and context.

The more frequently specific tokens are mentioned together, the more likely they are to be contextually related.

In simple terms, this increases the probability score during decoding.

Dig deeper: How to gain visibility in generative AI answers: GEO for Perplexity and ChatGPT

Get the newsletter search marketers rely on.

Source and information selection for retrieval-augmented generation

GEO focuses on positioning products, brands and content within the training data of LLMs. Understanding the training process of LLMs is crucial for identifying potential opportunities for inclusion.

The following insights are drawn from studies, patents, scientific documents, research on E-E-A-T and personal analysis. The central questions are:

- How big the influence of the retrieval systems is in the RAG process.

- How important the initial training data is.

- What other factors can play a role.

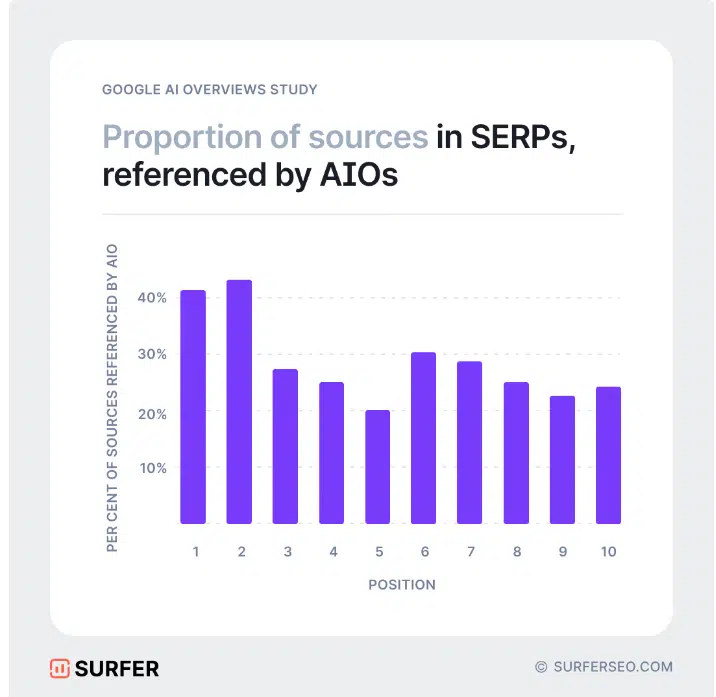

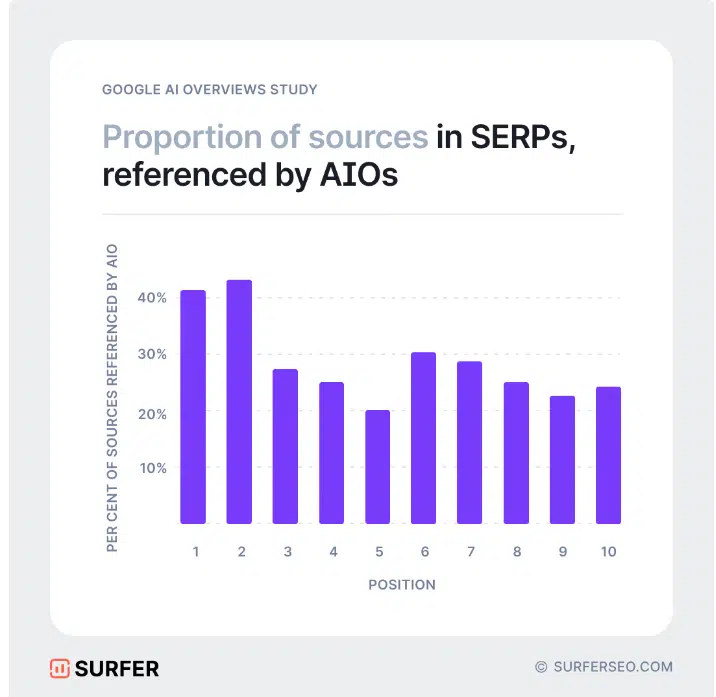

Recent studies, particularly on source selection for AI Overviews, Perplexity and Copilot, suggest overlaps in selected sources.

For example, Google AI Overviews show about 50% overlap in source selection, as evidenced by studies from Rich Sanger and Authoritas and Surfer.

The fluctuation range is very high. The overlap in studies from the beginning of 2024 was still around 15%. However, some studies found a 99% overlap.

The retrieval system appears to influence approximately 50% of the AI Overviews’ results, suggesting ongoing experimentation to improve performance. This aligns with justified criticism regarding the quality of AI Overview outputs.

The selection of referenced sources in AI answers highlights where it is beneficial to position brands or products in a contextually appropriate way.

It’s important to differentiate between sources used during the initial training of models and those added on a topic-specific basis during the RAG process.

Examining the model training process provides clarity. For instance, Google’s Gemini – a multimodal large language model – processes diverse data types, including text, images, audio, video and code.

Its training data comprises web documents, books, code and multimedia, enabling it to perform complex tasks efficiently.

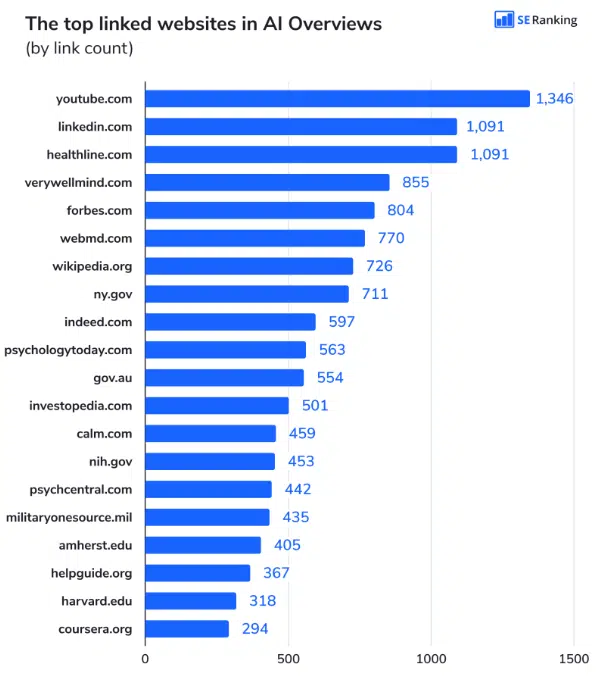

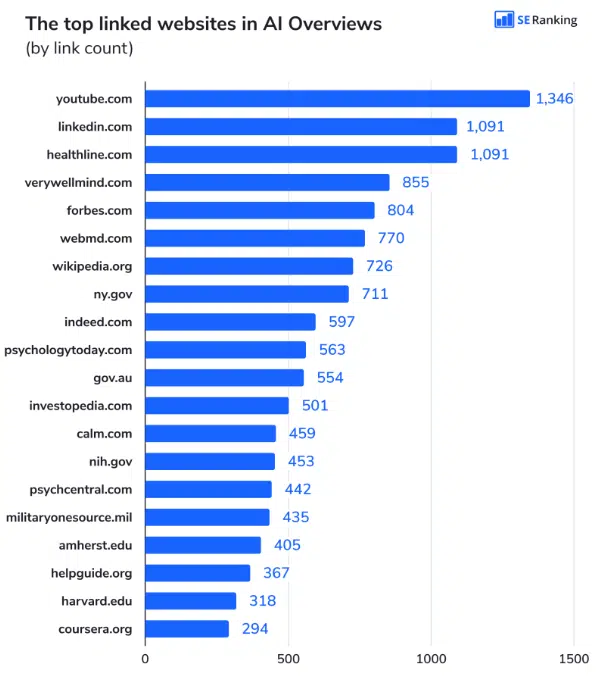

Studies on AI Overviews and their most frequently referenced sources offer insights into which sources Google uses for its indices and knowledge graph during pre-training, providing opportunities to align content for inclusion.

In the RAG process, domain-specific sources are incorporated to enhance contextual relevance.

A key feature of Gemini is its use of a Mixture of Experts (MoE) architecture.

Unlike traditional Transformers, which operate as a single large neural network, an MoE model is divided into smaller “expert” networks.

The model selectively activates the most relevant expert paths based on the input type, significantly improving efficiency and performance.

The RAG process is likely integrated into this architecture.

Gemini is developed by Google through multiple training phases, utilizing publicly available data and specialized techniques to maximize the relevance and precision of its generated content:

Pre-training

- Similar to other large language models (LLMs), Gemini is first pre-trained on various public data sources. Google applies various filters to ensure data quality and avoid problematic content.

- The training considers a flexible selection of likely words, allowing for more creative and contextually appropriate responses.

Supervised fine-tuning (SFT)

- After pre-training, the model is optimized using high-quality examples either created by experts or generated by models and then reviewed by experts.

- This process is similar to learning good text structure and content by seeing examples of well-written texts.

Reinforcement learning from human feedback (RLHF)

- The model is further developed based on human evaluations. A reward model based on user preferences helps Gemini recognize and learn preferred response styles and content.

Extensions and retrieval augmentation

- Gemini can search external data sources such as Google Search, Maps, YouTube or specific extensions to provide contextual information about the response.

- For example, when asked about current weather conditions or news, Gemini could access Google Search directly to find timely, reliable data and incorporate it into the response.

- Gemini performs search results filtering to select the most relevant information for the answer. The model takes into account the contextuality of the query and filters the data so that it fits the question as closely as possible.

- An example of this would be a complex technical question where the model selects results that are scientific or technical in nature rather than using general web content.

- Gemini combines the information retrieved from external sources with the model output.

- This process involves creating an optimized draft response that draws on both the model’s prior knowledge and information from the retrieved data sources.

- The model structures the answer so that the information is logically brought together and presented in a readable manner.

- Each answer undergoes additional review to ensure that it meets Google’s quality standards and does not contain problematic or inappropriate content.

- This security check is complemented by a ranking that favors the best quality versions of the answer. The model then presents the highest-ranked answer to the user.

User feedback and continuous optimization

- Google continuously integrates feedback from users and experts to adapt the model and fix any weak points.

One possibility is that AI applications access existing retrieval systems and use their search results.

Studies suggest that a strong ranking in the respective search engine increases the likelihood of being cited as a source in connected AI applications.

However, as noted, the overlaps do not yet show a clear correlation between top rankings and referenced sources.

Another criterion appears to influence source selection.

Google’s approach, for example, emphasizes adherence to quality standards when choosing sources for pre-training and RAG.

The use of classifiers is also mentioned as a factor in this process.

When naming classifiers, a bridge can be made to E-E-A-T, where quality classifiers are also used.

Information from Google regarding post-training also references using E-E-A-T in classifying sources according to quality.

The reference to evaluators connects to the role of quality raters in assessing E-E-A-T.

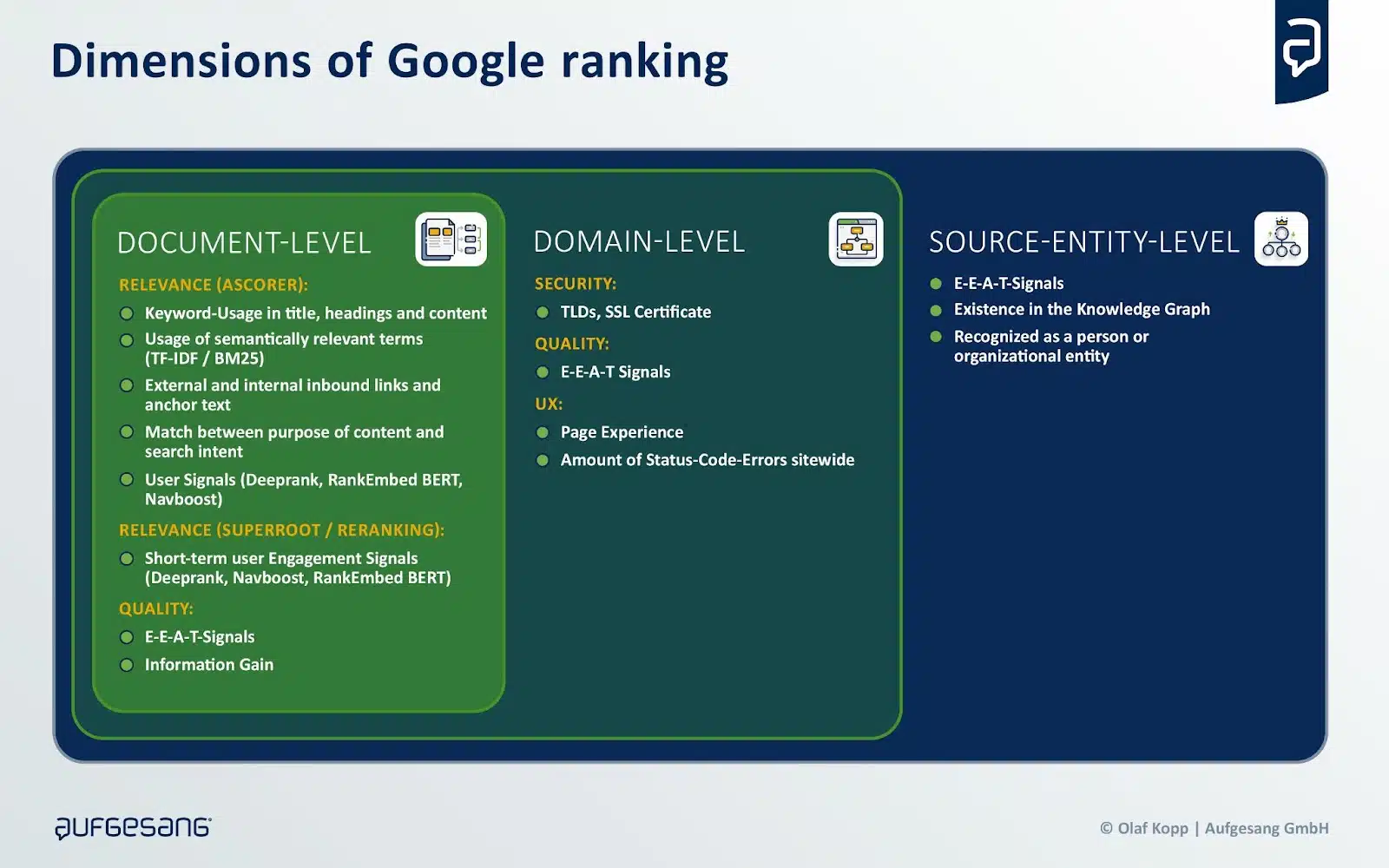

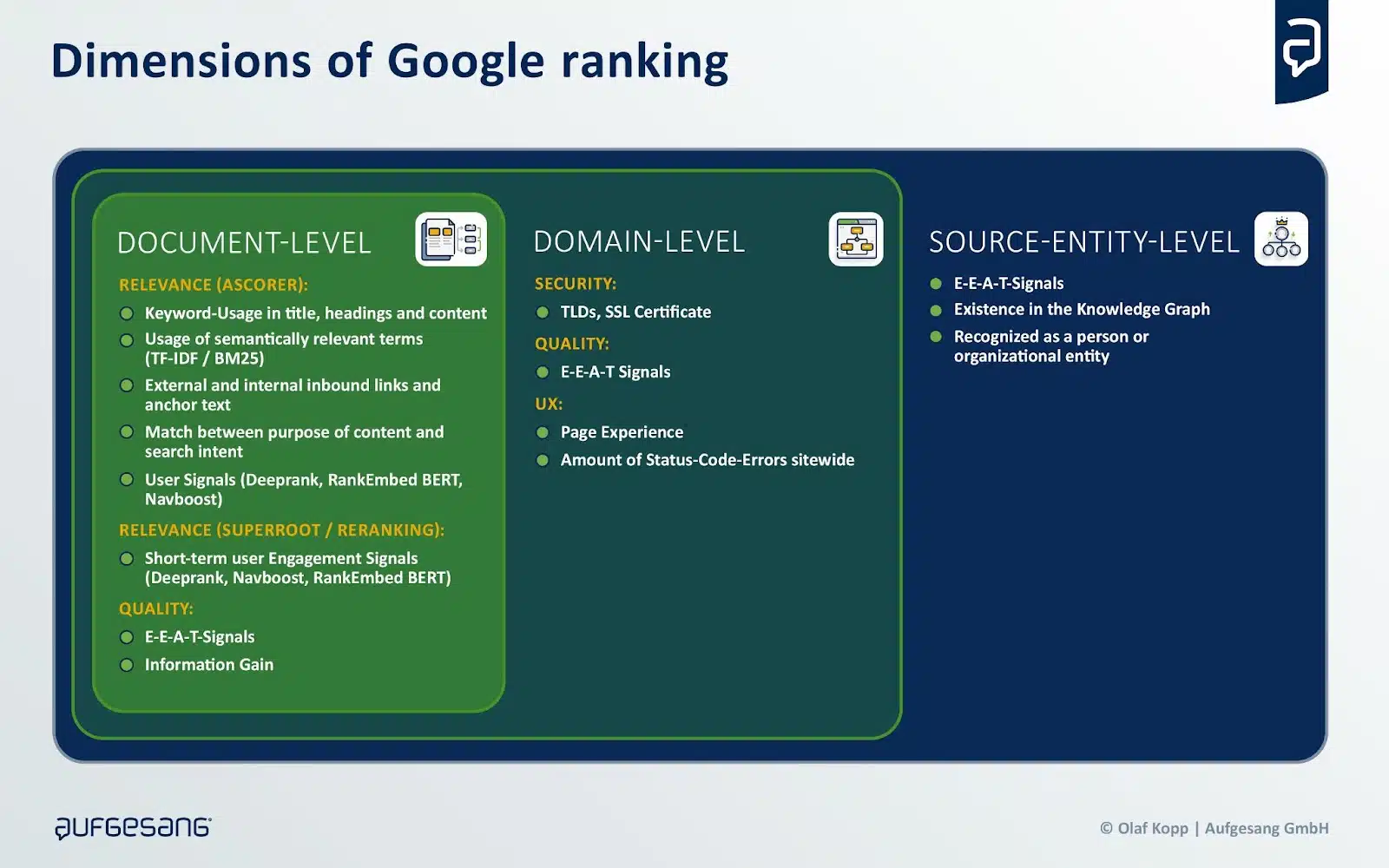

Rankings in most search engines are influenced by relevance and quality at the document, domain and author or source entity levels.

Sources may be chosen less for relevance and more for quality at the domain and source entity level.

This would also make sense, as more complex prompts have to be rewritten in the background so that appropriate search queries are created to query the rankings.

While relevance is query-dependent, quality remains consistent.

This distinction helps explain the weak correlation between rankings and sources referenced by generative AI and why lower-ranking sources are sometimes included.

To assess quality, search engines like Google and Bing rely on classifiers, including Google’s E-E-A-T framework.

Google has emphasized that E-E-A-T varies by subject area, necessitating topic-specific strategies, particularly in GEO strategies.

Referenced domain sources differ by industry or topic, with platforms like Wikipedia, Reddit and Amazon playing varying roles, according to a BrightEdge study.

Thus, industry- and topic-specific factors must be integrated into positioning strategies.

Dig deeper: How to implement generative engine optimization (GEO) strategies

Tactical and strategic approaches for LLMO / GEO

As previously noted, there are no proven success stories yet for influencing the results of generative AI.

Platform operators themselves seem uncertain about how to qualify the sources selected during the RAG process.

These points underscore the importance of identifying where optimization efforts should focus – specifically, determining which sources are sufficiently trustworthy and relevant to prioritize.

The next challenge is understanding how to establish yourself as one of those sources.

The research paper “GEO: Generative Engine Optimization” introduced the concept of GEO, exploring how generative AI outputs can be influenced and identifying the factors responsible for this.

According to the study, the visibility and effectiveness of GEO can be enhanced by the following factors:

- Authority in writing: Improves performance, particularly on debate questions and queries in historical contexts, as more persuasive writing is likely to have more value in debate-like contexts.

- Citations (cite sources): Particularly beneficial for factual questions, as they provide a source of verification for the facts presented, thereby increasing the credibility of the answer.

- Statistical addition: Particularly effective in fields such as Law, Government and Opinion, where incorporating relevant statistics into webpage content can enhance visibility in specific contexts.

- Quotation addition: Most impactful in areas like People and Society, Explanations and History, likely because these topics often involve personal narratives or historical events where direct quotes add authenticity and depth.

These factors vary in effectiveness depending on the domain, suggesting that incorporating domain-specific, targeted customizations into web pages is essential for increased visibility.

The following tactical dos for GEO and LLMO can be derived from the paper:

- Use citable sources: Incorporate citable sources into your content to increase credibility and authenticity, especially factual ones

- Insert statistics: Add relevant statistics to strengthen your arguments, especially in areas like Law and Government and opinion questions.

- Add quotes: Use quotes to enrich content in areas such as People and Society, Explanations and History as they add authenticity and depth.

- Domain-specific optimization: Consider the specifics of your domain when optimizing, as the effectiveness of GEO methods varies depending on the area.

- Focus on content quality: Focus on creating high-quality, relevant and informative content that provides value to users.

Additionally, tactical don’ts can also be identified:

- Avoid keyword stuffing: Traditional keyword stuffing shows little to no improvement in generative search engine responses and should be avoided.

- Don’t ignore the context: Avoid generating content that is unrelated to the topic or does not provide any added value for the user.

- Don’t overlook user intent: Don’t neglect the intent behind search queries. Make sure your content actually answers users’ questions.

BrightEdge has outlined the following strategic considerations based on the aforementioned research:

Different impacts of backlinks and co-citations

- AI Overviews and Perplexity favor distinct domain sets depending on the industry.

- In healthcare and education, both platforms prioritize trusted sources like mayoclinic.org and coursera.com, making these or similar domains key targets for effective SEO strategies.

- Conversely, in sectors like ecommerce and finance, Perplexity shows a preference for domains such as reddit.com, yahoo.com, and marketwatch.com.

- Tailoring SEO efforts to these preferences by leveraging backlinks and co-citations can significantly enhance performance.

Tailored strategies for AI-powered search

- AI-powered search approaches must be customized for each industry.

- For instance, Perplexity’s preference for reddit.com underscores the importance of community insights in ecommerce, while AI Overviews leans toward established review and Q&A sites like consumerreports.org and quora.com.

- Marketers and SEOs should align their content strategies with these tendencies by creating detailed product reviews or fostering Q&A forums to support ecommerce brands.

Anticipate changes in the citation landscape

- SEOs must closely monitor Perplexity’s preferred domains, especially the platform’s reliance on reddit.com for community-driven content.

- Google’s partnership with Reddit could influence Perplexity’s algorithms to prioritize Reddit’s content further. This trend indicates a growing emphasis on user-generated content.

- SEOs should remain proactive and adaptable, refining strategies to align with Perplexity’s evolving citation preferences to maintain relevance and effectiveness.

Below are industry-specific tactical and strategic measures for GEO.

B2B tech

- Establish a presence on authoritative tech domains, particularly techtarget.com, ibm.com, microsoft.com and cloudflare.com, which are recognized as trusted sources by both platforms.

- Leverage content syndication on these established platforms to get cited as a trusted source faster.

- In the long term, build your own domain authority through high-quality content, as competition for syndication spots will increase.

- Enter into partnerships with leading tech platforms and actively contribute content there.

- Demonstrate expertise through credentials, certifications and expert opinions to signal trustworthiness.

Ecommerce

- Establish a strong presence on Amazon, as Perplexity’s platform is widely used as a source.

- Actively promote product reviews and user-generated content on Amazon and other relevant platforms.

- Distribute product information via established dealer platforms and comparison sites

- Syndicate content and partner with trusted domains.

- Maintain detailed and up-to-date product descriptions on all sales platforms.

- Get involved on relevant specialist portals and community platforms such as Reddit.

- Pursue a balanced marketing strategy that relies on both external platforms and your own domain authority.

Continuing education

- Build trustworthy sources and collaborate with authoritative domains such as coursera.org, usnews.com and bestcolleges.com, as these are considered relevant by both systems.

- Create up-to-date, high-quality content that AI systems classify as trustworthy. The content should be clearly structured and supported by expert knowledge.

- Build an active presence on relevant platforms like Reddit as community-driven content becomes increasingly important.

- Optimize your own content for AI systems through clear structuring, clear headings and concise answers to common user questions.

- Clearly highlight quality features such as certifications and accreditations, as these increase credibility.

Finance

- Build a presence on trustworthy financial portals such as yahoo.com and marketwatch.com, as these are preferred sources by AI systems.

- Maintain current and accurate company information on leading platforms such as Yahoo Finance.

- Create high-quality, factually correct content and support it with references to recognized sources.

- Build an active presence in relevant Reddit communities as Reddit gains traction as a source for AI systems.

- Enter into partnerships with established financial media to increase your own visibility and credibility.

- Demonstrate expertise through specialist knowledge, certifications and expert opinions.

Health

- Link and reference content to trusted sources such as mayoclinic.org, nih.gov and medlineplus.gov.

- Incorporate current medical research and trends into the content.

- Provide comprehensive and well-researched medical information backed by official institutions.

- Rely on credibility and expertise through certifications and qualifications.

- Conduct regular content updates with new medical findings.

- Pursue a balanced content strategy that both builds your own domain authority and leverages established healthcare platforms.

Insurance

- Use trustworthy sources: Place content on recognized domains such as forbes.com and official government websites (.gov), as these are considered particularly credible by AI search engines.

- Provide current and accurate information: Insurance information must always be current and factually correct. This particularly applies to product and service descriptions.

- Content syndication: Publish content on authoritative platforms such as Forbes or recognized specialist portals in order to be cited as a trustworthy source more quickly.

- Emphasize local relevance: Content should be adapted to regional markets and take local insurance regulations into account.

Restaurants

- Build and maintain a strong presence on key review platforms such as Yelp, TripAdvisor, OpenTable and GrubHub.

- Actively promote and collect positive ratings and reviews from guests.

- Provide complete and up-to-date information on these platforms (menus, opening times, photos, etc.).

- Interact with food communities and specialized gastro platforms such as Eater.com.

- Perform local SEO optimization as AI searches place a strong emphasis on local relevance.

- Create and update comprehensive and well-maintained Wikipedia entries.

- Offer a seamless online reservation process via relevant platforms.

- Provide high-quality content about the restaurant on various channels.

Tourism / Travel

- Optimize presence on key travel platforms such as TripAdvisor, Expedia, Kayak, Hotels.com and Booking.com, as they are viewed as trusted sources by AI search engines.

- Create comprehensive content with travel guides, tips and authentic reviews.

- Optimize the booking process and make it user-friendly.

- Perform local SEO since AI searches are often location-based.

- Be active on relevant platforms and encourage reviews.

- Providing high-quality content with added value for the user.

- Collaborate with trusted domains and partners.

The future of GEO and what it means for brands

The significance of GEO for companies hinges on whether future generations will adapt their search behavior and shift from Google to other platforms.

Emerging trends in this area should become apparent in the coming years, potentially affecting the search market share.

For instance, ChatGPT Search relies heavily on Microsoft Bing’s search technology.

If ChatGPT establishes itself as a dominant generative AI application, ranking well on Microsoft Bing could become critical for companies aiming to influence AI-driven applications.

This development could offer Microsoft Bing an opportunity to gain market share indirectly.

Whether LLMO or GEO will evolve into a viable strategy for steering LLMs toward specific goals remains uncertain.

However, if it does, achieving the following objectives will be essential:

- Establishing owned media as a source for LLM training data through E-E-A-T principles.

- Generating mentions of the brand and its products in reputable media.

- Creating co-occurrences of the brand with relevant entities and attributes in authoritative media.

- Producing high-quality content that ranks well and is considered in RAG processes.

- Ensuring inclusion in established graph databases like the Knowledge Graph or Shopping Graph.

The success of LLM optimization correlates with market size. In niche markets, it is easier to position a brand within its thematic context due to reduced competition.

Fewer co-occurrences in qualified media are required to associate the brand with relevant attributes and entities in LLMs.

Conversely, in larger markets, achieving this is more challenging because competitors often have extensive PR and marketing resources and a well-established presence.

Implementing GEO or LLMO demands significantly greater resources than traditional SEO, as it involves influencing public perception at scale.

Companies must strategically prepare for these shifts, which is where frameworks like digital authority management come into play. This concept helps organizations align structurally and operationally to succeed in an AI-driven future.

In the future, large brands are likely to hold substantial advantages in search engine rankings and generative AI outputs due to their superior PR and marketing resources.

However, traditional SEO can still play a role in training LLMs by leveraging high-ranking content.

The extent of this influence depends on how retrieval systems weigh content in the training process.

Ultimately, companies should prioritize the co-occurrence of their brands/products with relevant attributes and entities while optimizing for these relationships in qualified media.

Dig deeper: 5 GEO trends shaping the future of search

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.

#visible #generative #search #results